分布式服务注册发现与统一配置管理之 Consul

每天早上七点三十,准时推送干货Hello 大家好,我是阿粉,前面的文章给大家介绍过 Nacos,用于服务注册发现和管理配置的开源组件,今天给大家分享另一个组件 Consul 也有相应的功...

每天早上七点三十,准时推送干货

Hello 大家好,我是阿粉,前面的文章给大家介绍过 Nacos,用于服务注册发现和管理配置的开源组件,今天给大家分享另一个组件 Consul 也有相应的功能,我们一起来看一下吧!

背景

目前分布式系统架构已经基本普及,很多项目都是基于分布式架构的,以往的单机模式基本已经不适应当下互联网行业的发展。随着分布式项目的普及,项目服务实例数目的增加,服务的注册与发现功能就成了一项必不可少的架构。服务的注册与发现的功能,有很多开源方案。包括早期的zookeeper,百度的disconf,阿里的diamond,基于Go语言的ETCD,Spring集成的Eureka,以及前文提到的 Nacos 还有本文的主角Consul。这里不对上面提到的进行比较,本文仅介绍Consul,详细的对比,说明网上有很多资料,可以参考,例如:服务发现比较:Consul vs Zookeeper vs Etcd vs Eureka说到服务的注册与发现主要是下面两个主要功能:

-

服务注册与发现

-

配置中心即分布式项目统一配置管理

Consul 简介

-

Consul采用Go语言开发

-

Consul内置服务注册与发现框架、分布一致性协议实现、健康检查、Key/Value存储、多数据中心方案;不依赖其他工具,安装包包含一个可执行文件

-

支持DNS、HTTP协议接口

-

自带web-ui

-

Consul是 HashiCorp 公司推出的开源工具,用于实现分布式系统的服务发现与配置。

-

Consul支持两种服务注册的方式,一种是通过Consul的服务注册HTTP API,由服务自身在启动后调用API注册自己,另外一种则是通过在配置文件中定义服务的方式进行注册。Consul文档中建议使用后面一种方式来做服务 配置和服务注册。

Consul 服务端配置使用

-

下载相应版本解压,并将可执行文件复制到/usr/local/consul目录下

-

创建一个service的配置文件

silence$ sudo mkdir /etc/consul.d silence$ echo '{"service":{"name": "web", "tags": ["rails"], "port": 80}}' | sudo tee /etc/consul.d/web.json -

启动代理

silence$ /usr/local/consul/consul agent -dev -node consul_01 -config-dir=/etc/consul.d/ -ui-dev 参数代表本地测试环境启动;-node 参数表示自定义集群名称;-config-drir 参数表示services的注册配置文件目录,即上面创建的文件夹-ui 启动自带的web-ui管理页面

-

集群成员查询方式

silence-pro:~ silence$ /usr/local/consul/consul members -

HTTP协议数据查询

silence-pro:~ silence$ curl http://127.0.0.1:8500/v1/catalog/service/web [ { "ID": "ab1e3577-1b24-d254-f55e-9e8437956009", "Node": "consul_01", "Address": "127.0.0.1", "Datacenter": "dc1", "TaggedAddresses": { "lan": "127.0.0.1", "wan": "127.0.0.1" }, "NodeMeta": { "consul-network-segment": "" }, "ServiceID": "web", "ServiceName": "web", "ServiceTags": [ "rails" ], "ServiceAddress": "", "ServicePort": 80, "ServiceEnableTagOverride": false, "CreateIndex": 6, "ModifyIndex": 6 } ] silence-pro:~ silence$ -

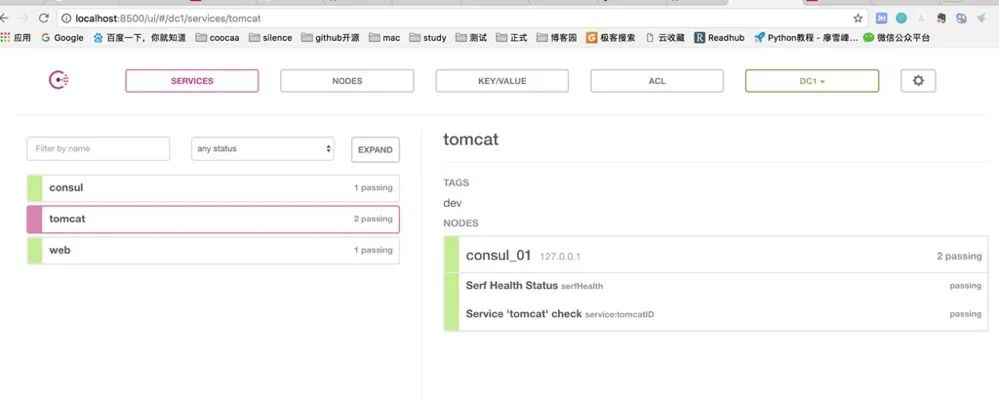

web-ui管理

Consul Web UI

Consul的web-ui可以用来进行服务状态的查看,集群节点的检查,访问列表的控制以及KV存储系统的设置,相对于Eureka和ETCD,Consul的web-ui要好用的多。(Eureka和ETCD将在下一篇文章中简单介绍。)

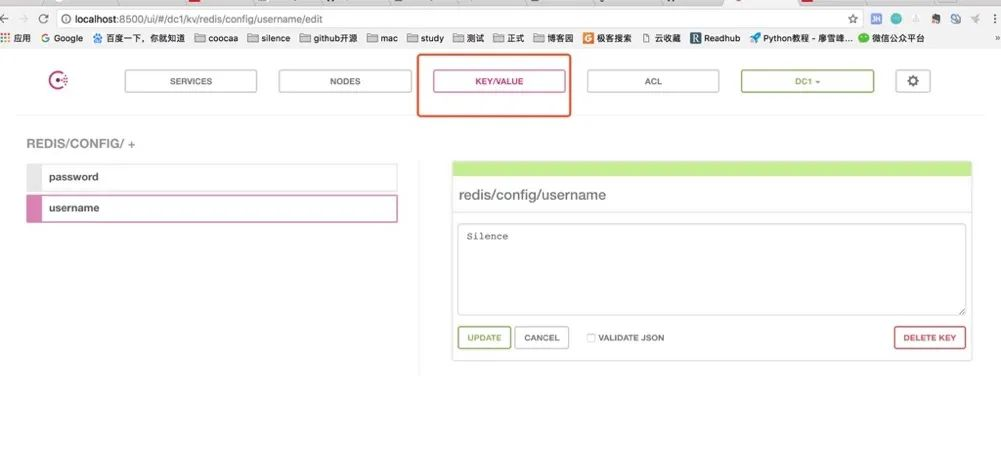

7. KV存储的数据导入和导出

silence-pro:consul silence$ ./consul kv import @temp.json

silence-pro:consul silence$ ./consul kv export redis/

temp.json文件内容格式如下,一般是管理页面配置后先导出保存文件,以后需要再导入该文件

[

{

"key": "redis/config/password",

"flags": 0,

"value": "MTIzNDU2"

},

{

"key": "redis/config/username",

"flags": 0,

"value": "U2lsZW5jZQ=="

},

{

"key": "redis/zk/",

"flags": 0,

"value": ""

},

{

"key": "redis/zk/password",

"flags": 0,

"value": "NDU0NjU="

},

{

"key": "redis/zk/username",

"flags": 0,

"value": "ZGZhZHNm"

}

]

Consul的KV存储系统是一种类似zk的树形节点结构,用来存储相关key/value键值对信息的,我们可以使用KV存储系统来实现上面提到的配置中心,将统一的配置信息保存在KV存储系统里面,方便各个实例获取并使用同一配置。而且更改配置后各个服务可以自动拉取最新配置,不需要重启服务。

Consul Java 客户端使用

-

maven pom依赖增加,版本可自由更换

<dependency> <groupId>com.orbitz.consul</groupId> <artifactId>consul-client</artifactId> <version>0.12.3</version> </dependency> <dependency> <groupId>org.springframework.boot</groupId> <artifactId>spring-boot-starter-actuator</artifactId> </dependency> -

Consul 基本工具类,根据需要相应扩展

package com.coocaa.consul.consul.demo; import com.google.common.base.Optional; import com.google.common.net.HostAndPort; import com.orbitz.consul.*; import com.orbitz.consul.model.agent.ImmutableRegCheck; import com.orbitz.consul.model.agent.ImmutableRegistration; import com.orbitz.consul.model.health.ServiceHealth; import java.net.MalformedURLException; import java.net.URI; import java.util.List; public class ConsulUtil { private static Consul consul = Consul.builder().withHostAndPort(HostAndPort.fromString("127.0.0.1:8500")).build(); /** * 服务注册 */ public static void serviceRegister() { AgentClient agent = consul.agentClient(); try { /** * 注意该注册接口: * 需要提供一个健康检查的服务URL,以及每隔多长时间访问一下该服务(这里是3s) */ agent.register(8080, URI.create("http://localhost:8080/health").toURL(), 3, "tomcat", "tomcatID", "dev"); } catch (MalformedURLException e) { e.printStackTrace(); } } /** * 服务获取 * * @param serviceName */ public static void findHealthyService(String serviceName) { HealthClient healthClient = consul.healthClient(); List<ServiceHealth> serviceHealthList = healthClient.getHealthyServiceInstances(serviceName).getResponse(); serviceHealthList.forEach((response) -> { System.out.println(response); }); } /** * 存储KV */ public static void storeKV(String key, String value) { KeyValueClient kvClient = consul.keyValueClient(); kvClient.putValue(key, value); } /** * 根据key获取value */ public static String getKV(String key) { KeyValueClient kvClient = consul.keyValueClient(); Optional<String> value = kvClient.getValueAsString(key); if (value.isPresent()) { return value.get(); } return ""; } /** * 找出一致性的节点(应该是同一个DC中的所有server节点) */ public static List<String> findRaftPeers() { StatusClient statusClient = consul.statusClient(); return statusClient.getPeers(); } /** * 获取leader */ public static String findRaftLeader() { StatusClient statusClient = consul.statusClient(); return statusClient.getLeader(); } public static void main(String[] args) { AgentClient agentClient = consul.agentClient(); agentClient.deregister("tomcatID"); } }temp.json 和 ConsulUtil.java 文件以及上传到 GitHub 资源库,回复【源码仓库】获取代码地址。

-

通过上面的基本工具类可以实现服务的注册和KV数据的获取与存储功能

Consul集群搭建

参考地址 Consul集群搭建 http://ju.outofmemory.cn/entry/189899

-

三台主机Consul下载安装,我这里没有物理主机,所以通过三台虚拟机来实现。虚拟机IP分192.168.231.145,192.168.231.146,192.168.231.147

-

将145和146两台主机作为Server模式启动,147作为Client模式启动,Server和Client只是针对Consul集群来说的,跟服务没有任何关系!

-

Server模式启动145,节点名称设为n1,数据中心统一用dc1

[root@centos145 consul]# ./consul agent -server -bootstrap-expect 2 -data-dir /tmp/consul -node=n1 -bind=192.168.231.145 -datacenter=dc1 bootstrap_expect = 2: A cluster with 2 servers will provide no failure tolerance. See https://www.consul.io/docs/internals/consensus.html#deployment-table bootstrap_expect > 0: expecting 2 servers ==> Starting Consul agent... ==> Consul agent running! Version: 'v1.0.1' Node ID: '6cc74ff7-7026-cbaa-5451-61f02114cd25' Node name: 'n1' Datacenter: 'dc1' (Segment: '<all>') Server: true (Bootstrap: false) Client Addr: [127.0.0.1] (HTTP: 8500, HTTPS: -1, DNS: 8600) Cluster Addr: 192.168.231.145 (LAN: 8301, WAN: 8302) Encrypt: Gossip: false, TLS-Outgoing: false, TLS-Incoming: false ==> Log data will now stream in as it occurs: 2017/12/06 23:26:21 [INFO] raft: Initial configuration (index=0): [] 2017/12/06 23:26:21 [INFO] serf: EventMemberJoin: n1.dc1 192.168.231.145 2017/12/06 23:26:21 [INFO] serf: EventMemberJoin: n1 192.168.231.145 2017/12/06 23:26:21 [INFO] agent: Started DNS server 127.0.0.1:8600 (udp) 2017/12/06 23:26:21 [INFO] raft: Node at 192.168.231.145:8300 [Follower] entering Follower state (Leader: "") 2017/12/06 23:26:21 [INFO] consul: Adding LAN server n1 (Addr: tcp/192.168.231.145:8300) (DC: dc1) 2017/12/06 23:26:21 [INFO] consul: Handled member-join event for server "n1.dc1" in area "wan" 2017/12/06 23:26:21 [INFO] agent: Started DNS server 127.0.0.1:8600 (tcp) 2017/12/06 23:26:21 [INFO] agent: Started HTTP server on 127.0.0.1:8500 (tcp) 2017/12/06 23:26:21 [INFO] agent: started state syncer 2017/12/06 23:26:28 [ERR] agent: failed to sync remote state: No cluster leader 2017/12/06 23:26:30 [WARN] raft: no known peers, aborting election 2017/12/06 23:26:49 [ERR] agent: Coordinate update error: No cluster leader 2017/12/06 23:26:54 [ERR] agent: failed to sync remote state: No cluster leader 2017/12/06 23:27:24 [ERR] agent: Coordinate update error: No cluster leader 2017/12/06 23:27:27 [ERR] agent: failed to sync remote state: No cluster leader 2017/12/06 23:27:56 [ERR] agent: Coordinate update error: No cluster leader 2017/12/06 23:28:02 [ERR] agent: failed to sync remote state: No cluster leader 2017/12/06 23:28:27 [ERR] agent: failed to sync remote state: No cluster leader 2017/12/06 23:28:33 [ERR] agent: Coordinate update error: No cluster leader目前只启动了145,所以还没有集群

-

Server模式启动146,节点名称用n2,并在n2上启用了web-ui管理页面功能

[root@centos146 consul]# ./consul agent -server -bootstrap-expect 2 -data-dir /tmp/consul -node=n2 -bind=192.168.231.146 -datacenter=dc1 -ui bootstrap_expect = 2: A cluster with 2 servers will provide no failure tolerance. See https://www.consul.io/docs/internals/consensus.html#deployment-table bootstrap_expect > 0: expecting 2 servers ==> Starting Consul agent... ==> Consul agent running! Version: 'v1.0.1' Node ID: 'eb083280-c403-668f-e193-60805c7c856a' Node name: 'n2' Datacenter: 'dc1' (Segment: '<all>') Server: true (Bootstrap: false) Client Addr: [127.0.0.1] (HTTP: 8500, HTTPS: -1, DNS: 8600) Cluster Addr: 192.168.231.146 (LAN: 8301, WAN: 8302) Encrypt: Gossip: false, TLS-Outgoing: false, TLS-Incoming: false ==> Log data will now stream in as it occurs: 2017/12/06 23:28:30 [INFO] raft: Initial configuration (index=0): [] 2017/12/06 23:28:30 [INFO] serf: EventMemberJoin: n2.dc1 192.168.231.146 2017/12/06 23:28:31 [INFO] serf: EventMemberJoin: n2 192.168.231.146 2017/12/06 23:28:31 [INFO] raft: Node at 192.168.231.146:8300 [Follower] entering Follower state (Leader: "") 2017/12/06 23:28:31 [INFO] consul: Adding LAN server n2 (Addr: tcp/192.168.231.146:8300) (DC: dc1) 2017/12/06 23:28:31 [INFO] consul: Handled member-join event for server "n2.dc1" in area "wan" 2017/12/06 23:28:31 [INFO] agent: Started DNS server 127.0.0.1:8600 (tcp) 2017/12/06 23:28:31 [INFO] agent: Started DNS server 127.0.0.1:8600 (udp) 2017/12/06 23:28:31 [INFO] agent: Started HTTP server on 127.0.0.1:8500 (tcp) 2017/12/06 23:28:31 [INFO] agent: started state syncer 2017/12/06 23:28:38 [ERR] agent: failed to sync remote state: No cluster leader 2017/12/06 23:28:39 [WARN] raft: no known peers, aborting election 2017/12/06 23:28:57 [ERR] agent: Coordinate update error: No cluster leader 2017/12/06 23:29:11 [ERR] agent: failed to sync remote state: No cluster leader 2017/12/06 23:29:30 [ERR] agent: Coordinate update error: No cluster leader 2017/12/06 23:29:38 [ERR] agent: failed to sync remote state: No cluster leader 2017/12/06 23:29:57 [ERR] agent: Coordinate update error: No cluster leader同样没有集群发现,此时n1和n2都启动起来,但是互相并不知道集群的存在!

-

将n1节点加入n2

[silence@centos145 consul]$ ./consul join 192.168.231.146此时n1和n2都打印发现了集群的日志信息

-

这个时候n1和n2两个节点已经是一个集群里面的Server模式的节点了

-

Client模式启动147

[root@centos147 consul]# ./consul agent -data-dir /tmp/consul -node=n3 -bind=192.168.231.147 -datacenter=dc1 ==> Starting Consul agent... ==> Consul agent running! Version: 'v1.0.1' Node ID: 'be7132c3-643e-e5a2-9c34-cad99063a30e' Node name: 'n3' Datacenter: 'dc1' (Segment: '') Server: false (Bootstrap: false) Client Addr: [127.0.0.1] (HTTP: 8500, HTTPS: -1, DNS: 8600) Cluster Addr: 192.168.231.147 (LAN: 8301, WAN: 8302) Encrypt: Gossip: false, TLS-Outgoing: false, TLS-Incoming: false ==> Log data will now stream in as it occurs: 2017/12/06 23:36:46 [INFO] serf: EventMemberJoin: n3 192.168.231.147 2017/12/06 23:36:46 [INFO] agent: Started DNS server 127.0.0.1:8600 (udp) 2017/12/06 23:36:46 [INFO] agent: Started DNS server 127.0.0.1:8600 (tcp) 2017/12/06 23:36:46 [INFO] agent: Started HTTP server on 127.0.0.1:8500 (tcp) 2017/12/06 23:36:46 [INFO] agent: started state syncer 2017/12/06 23:36:46 [WARN] manager: No servers available 2017/12/06 23:36:46 [ERR] agent: failed to sync remote state: No known Consul servers 2017/12/06 23:37:08 [WARN] manager: No servers available 2017/12/06 23:37:08 [ERR] agent: failed to sync remote state: No known Consul servers 2017/12/06 23:37:36 [WARN] manager: No servers available 2017/12/06 23:37:36 [ERR] agent: failed to sync remote state: No known Consul servers 2017/12/06 23:38:02 [WARN] manager: No servers available 2017/12/06 23:38:02 [ERR] agent: failed to sync remote state: No known Consul servers 2017/12/06 23:38:22 [WARN] manager: No servers available 2017/12/06 23:38:22 [ERR] agent: failed to sync remote state: No known Consul servers -

在n3上面将节点n3加入集群

[silence@centos147 consul]$ ./consul join 192.168.231.145 -

再次查看集群节点信息

-

此时三个节点的Consul集群搭建成功了!其实n1和n2是Server模式启动,n3是Client模式启动。

-

关于Consul的Server模式和Client模式主要的区别是这样的,一个Consul集群通过启动的参数

-bootstrap-expect来控制这个集群的Server节点个数,Server模式的节点会维护集群的状态,并且如果某个Server节点退出了集群,则会触发Leader重新选举机制,在会剩余的Server模式节点中重新选举一个Leader;而Client模式的节点的加入和退出很自由。 -

在n2中启动web-ui

最后说两句(求关注)

最近大家应该发现微信公众号信息流改版了吧,再也不是按照时间顺序展示了。这就对阿粉这样的坚持的原创小号主,可以说非常打击,阅读量直线下降,正反馈持续减弱。

所以看完文章,哥哥姐姐们给阿粉来个在看吧,让阿粉拥有更加大的动力,写出更好的文章,拒绝白嫖,来点正反馈呗~。

如果想在第一时间收到阿粉的文章,不被公号的信息流影响,那么可以给Java极客技术设为一个星标。

最后感谢各位的阅读,才疏学浅,难免存在纰漏,如果你发现错误的地方,由于本号没有留言功能,还请你在后台留言指出,我对其加以修改。

最后谢谢大家支持~

最最后,重要的事再说一篇~

快来关注我呀~

快来关注我呀~

快来关注我呀~

< END >

如果大家喜欢我们的文章,欢迎大家转发,点击在看让更多的人看到。也欢迎大家热爱技术和学习的朋友加入的我们的知识星球当中,我们共同成长,进步。

往期精彩回顾

更多推荐

已为社区贡献1条内容

已为社区贡献1条内容

所有评论(0)